A Complete 2025 Guide to Understanding Data Science – Its Definition, Real-World Applications, Essential Tools, and Career Opportunities

1. Definition: What is Data Science?

Data science is the field of collecting, processing, analyzing, and modeling data to extract insights and make predictions, drawing on statistics, computer science, and domain knowledge. A popular way to describe why it matters is the phrase “Data is the new oil”: data has become one of the most valuable resources in today’s world, much as oil was in the industrial age. Just as oil powered machines, cars, and entire economies, data now powers businesses, technologies, and decisions. However, data, like oil, is not valuable in its raw form. Raw data is unorganized, messy, and often meaningless on its own; it must be processed, cleaned, and analyzed before it can be turned into something useful.

Think about crude oil. It must go through refining to become fuel, plastic, or chemicals. Similarly, raw data must go through a process to become information or insight. This process includes collecting data from different sources, removing errors or duplicates, organizing it, and then applying techniques such as statistical analysis, modeling, and visualization to extract meaning. Only then does data become valuable and actionable.
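The refining steps above (collect, clean, organize, analyze) can be sketched in a few lines of plain Python. This is a minimal illustration, assuming hypothetical purchase records from two sources; the field names and the `refine` function are invented for the example, and real projects would typically use a library such as Pandas.

```python
from collections import defaultdict

# Hypothetical raw purchase records "collected" from two sources.
app_events = [
    {"user": "u1", "amount": "19.99"},
    {"user": "u2", "amount": "5.00"},
    {"user": "u1", "amount": "19.99"},  # exact duplicate of the first record
]
web_events = [
    {"user": "u3", "amount": "n/a"},    # malformed value that cannot be parsed
    {"user": "u2", "amount": "12.50"},
]


def refine(*sources):
    """Collect, clean, organize, and summarize raw purchase records."""
    # 1. Collect: combine the records from every source into one list.
    raw = [record for source in sources for record in source]

    # 2. Clean: skip exact duplicates and records whose amount is not numeric.
    seen, clean = set(), []
    for record in raw:
        key = (record["user"], record["amount"])
        if key in seen:
            continue
        seen.add(key)
        try:
            clean.append({"user": record["user"], "amount": float(record["amount"])})
        except ValueError:
            continue  # values such as "n/a" are discarded

    # 3. Organize: group the cleaned amounts by user.
    by_user = defaultdict(list)
    for record in clean:
        by_user[record["user"]].append(record["amount"])

    # 4. Analyze: reduce the organized data to total spend per user.
    return {user: round(sum(amounts), 2) for user, amounts in by_user.items()}


print(refine(app_events, web_events))  # {'u1': 19.99, 'u2': 17.5}
```

Treating identical records as duplicates is an assumption; in some datasets two identical purchases are legitimate and need a unique transaction ID to tell them apart.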

Companies and governments collect massive amounts of data every day—from websites, apps, sensors, and devices. But without data science techniques, this data sits unused, just like barrels of crude oil with no refinery. When processed properly, data can help improve products, understand customers, predict trends, and make better decisions. It can be used in healthcare to save lives, in business to increase profits, and in cities to improve transportation and safety.

So while data is indeed the new oil, its true power comes from refining—turning raw data into knowledge, decisions, and innovation.

A Multidisciplinary Field

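One of the defining characteristics of data science is that it is inherently multidisciplinary. A data scientist must draw from several areas of expertise, chiefly statistics and mathematics, computer science and programming, and domain knowledge of the field being studied.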

What are the Main Components of Data Science?

A good Data Science system ensures that:

- Data is collected from reliable sources,

- It is cleaned and organized efficiently,

- Statistical and machine learning models are applied appropriately,

- Results are visualized clearly and communicated effectively,

- Ethical, scalable, and secure practices are followed throughout.

1. Data Collection

- Data collection is the first step in any data science project.

- It involves gathering raw data from various sources such as sensors, APIs, websites, or files.

- Data may be structured, semi-structured, or unstructured.

- The quality of this data impacts all downstream processes.

- Without data, no analysis is possible.

2. Data Storage

- This is where collected data is safely stored for access and analysis.

- Storage systems include SQL databases, NoSQL stores, cloud buckets, or data lakes.

- It must be scalable, secure, and accessible to handle growing datasets.

- Good storage design enables efficient querying and processing.

- It forms the backbone of a data infrastructure.

3. Data Cleaning

- Raw data is often messy, with errors, missing values, and inconsistencies.

- Cleaning ensures the data is accurate, complete, and usable.

- Techniques include removing duplicates, filling missing values, and correcting formats.

- Clean data leads to trustworthy results.

- It’s often the most time-consuming step.
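The three cleaning techniques listed above (removing duplicates, filling missing values, and correcting formats) can be illustrated with a small standard-library sketch. The rows, column names, and the choice to fill missing ages with the median are assumptions made for the example; the median is a common choice because it is less sensitive to extreme values than the mean.

```python
from statistics import median

# Hypothetical raw customer rows with a duplicate, a missing age, and messy formats.
raw_rows = [
    {"id": 1, "country": " usa ", "age": "34"},
    {"id": 2, "country": "USA", "age": None},
    {"id": 1, "country": " usa ", "age": "34"},  # duplicate of the first row
    {"id": 3, "country": "Canada", "age": "41"},
]


def clean_rows(rows):
    # Remove duplicates: keep only the first row seen for each id.
    unique, seen_ids = [], set()
    for row in rows:
        if row["id"] not in seen_ids:
            seen_ids.add(row["id"])
            unique.append(dict(row))  # copy so the raw input is left unchanged

    # Correct formats: trim whitespace, standardize case, convert ages to numbers.
    for row in unique:
        row["country"] = row["country"].strip().upper()
        row["age"] = int(row["age"]) if row["age"] is not None else None

    # Fill missing values with the median of the known ages.
    fill_value = median([row["age"] for row in unique if row["age"] is not None])
    for row in unique:
        if row["age"] is None:
            row["age"] = fill_value
    return unique


for row in clean_rows(raw_rows):
    print(row)
```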

4. Exploratory Data Analysis (EDA)

- EDA helps understand the shape and structure of the data.

- It includes visual summaries and statistical measures like the mean, median, and standard deviation, along with checks for outliers.

- This step uncovers trends, patterns, and anomalies.

- It guides feature selection and modeling decisions.

- Often involves plotting histograms, box plots, or scatter plots.
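A quick EDA pass often starts with summary statistics. The sketch below uses Python's built-in `statistics` module on hypothetical daily order counts to compute the mean, median, and standard deviation, and flags outliers with the common 1.5 × IQR rule (a convention, not the only option).

```python
from statistics import mean, median, quantiles, stdev

# Hypothetical daily order counts over two weeks, including one unusual spike.
orders = [42, 39, 45, 41, 38, 44, 40, 43, 37, 46, 41, 39, 120, 42]


def summarize(values):
    """Basic descriptive statistics plus outliers flagged by the 1.5 * IQR rule."""
    q1, _, q3 = quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return {
        "mean": round(mean(values), 2),
        "median": median(values),
        "stdev": round(stdev(values), 2),
        "outliers": [v for v in values if v < low or v > high],
    }


print(summarize(orders))
```

Here the single spike of 120 orders pulls the mean (about 46.9) above the median (41.5), which is exactly the kind of pattern EDA is meant to surface before modeling.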

5. Feature Engineering

- This is the process of transforming raw variables into model-ready features.

- It involves creating new features, scaling values, and encoding categories.

- Strong features often boost model performance.

- It blends domain knowledge with creativity.

- Feature quality can outweigh algorithm choice.
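The feature engineering steps named above (creating new features, scaling values, and encoding categories) are sketched below in plain Python. The customer fields are hypothetical, and min-max scaling and one-hot encoding are two common techniques among several.

```python
# Hypothetical raw customer records.
customers = [
    {"plan": "basic", "monthly_spend": 20.0, "months_active": 4},
    {"plan": "pro", "monthly_spend": 80.0, "months_active": 10},
    {"plan": "basic", "monthly_spend": 50.0, "months_active": 1},
]


def engineer_features(rows):
    plans = sorted({r["plan"] for r in rows})  # category vocabulary: ["basic", "pro"]
    spends = [r["monthly_spend"] for r in rows]
    lo, hi = min(spends), max(spends)
    features = []
    for r in rows:
        vec = {
            # New feature derived from two raw columns.
            "lifetime_spend": r["monthly_spend"] * r["months_active"],
            # Min-max scaling maps spend into the range [0, 1].
            "spend_scaled": (r["monthly_spend"] - lo) / (hi - lo) if hi > lo else 0.0,
        }
        # One-hot encoding: one binary column per plan category.
        for plan in plans:
            vec[f"plan_{plan}"] = 1 if r["plan"] == plan else 0
        features.append(vec)
    return features


for vec in engineer_features(customers):
    print(vec)
```

In a real project the scaling bounds and category list would be learned from the training data only and then reused on new data, so that no information leaks from the test set.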

6. Data Integration

- Combines data from multiple sources to create a unified dataset.

- Involves matching schemas, resolving conflicts, and aligning formats.

- Examples include merging CRM data with web analytics.

- Integration ensures completeness and richer insights.

- It’s crucial in real-world, multi-system environments.
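As a minimal sketch of the CRM-plus-web-analytics example, the code below aligns a mismatched key (`email` vs. `user_email`) and inconsistent casing, then performs a left join. Both exports and their field names are hypothetical, and treating email addresses as case-insensitive is an assumption that suits most real-world data.

```python
# Hypothetical CRM export.
crm = [
    {"customer_id": 1, "email": "Ana@Example.com", "segment": "enterprise"},
    {"customer_id": 2, "email": "li@example.com", "segment": "smb"},
]
# Hypothetical web-analytics export with a different key name and casing.
web = [
    {"user_email": "ana@example.com", "page_views": 57},
    {"user_email": "sam@example.com", "page_views": 3},
]


def integrate(crm_rows, web_rows):
    # Align formats: normalize emails to lowercase so both systems share one key.
    views_by_email = {w["user_email"].lower(): w["page_views"] for w in web_rows}
    merged = []
    for c in crm_rows:
        email = c["email"].lower()
        merged.append({
            "customer_id": c["customer_id"],
            "email": email,
            "segment": c["segment"],
            # Left join: CRM customers with no web activity get 0 page views.
            "page_views": views_by_email.get(email, 0),
        })
    return merged


for row in integrate(crm, web):
    print(row)
```

Visitors who exist only in the web data (here `sam@example.com`) are dropped by a left join; an outer join would keep them instead.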

7. Data Visualization

- Turns numbers into visual insights.

- Helps communicate findings through charts, maps, and dashboards.

- Makes patterns, trends, and anomalies easier to spot.

- Tools like Tableau, Power BI, and matplotlib are commonly used.

- Critical for both analysis and presentation.

8. Statistical Analysis

- Applies mathematical techniques to extract meaning from data.

- Common methods include correlation, regression, and hypothesis testing.

- Helps validate assumptions and understand relationships.

- Lays the groundwork for model development.

- Supports data-driven decision-making.
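Correlation and regression can be computed directly from their definitions. This sketch uses hypothetical ad-spend and sales figures to compute Pearson's correlation coefficient and an ordinary least squares line; in practice, libraries such as SciPy or statsmodels would also report p-values and confidence intervals.

```python
from math import sqrt

# Hypothetical data: advertising spend (in $1k) and resulting sales (in units).
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [12.0, 15.0, 21.0, 24.0, 28.0]


def pearson_r(x, y):
    """Pearson correlation: covariance divided by the product of spreads."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - slope * mx, slope


intercept, slope = fit_line(ad_spend, sales)
print(f"sales = {intercept:.1f} + {slope:.1f} * spend, r = {pearson_r(ad_spend, sales):.3f}")
```

With these numbers the fitted line is sales = 7.7 + 4.1 × spend and r is close to 1, indicating a strong linear relationship (which, on its own, does not establish causation).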

9. Machine Learning

- A method where models learn patterns from data.

- Used for prediction, classification, clustering, and more.

- Algorithms include decision trees, SVMs, and neural networks.

- Requires labeled or unlabeled data depending on the approach.

- Core to many modern data applications.

10. Model Training and Evaluation

- Involves fitting a model to historical data and assessing performance.

- The data is split into training and test sets so that overfitting can be detected on data the model has not seen.

- Metrics like accuracy, precision, and RMSE evaluate outcomes.

- Helps choose the best algorithm for the task.

- Crucial for ensuring reliable predictions.
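The split-then-evaluate workflow can be sketched with a few helper functions. The function names below are illustrative (they mirror, but are not, Scikit-learn's API); accuracy and precision apply to classification, while RMSE applies to regression.

```python
import random
from math import sqrt


def train_test_split(rows, test_fraction=0.25, seed=42):
    """Shuffle a copy of the rows with a fixed seed and hold out a test set."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision(y_true, y_pred, positive=1):
    """Of the items predicted positive, the share that really are positive."""
    hits = [t for t, p in zip(y_true, y_pred) if p == positive]
    return sum(t == positive for t in hits) / len(hits) if hits else 0.0


def rmse(y_true, y_pred):
    """Root mean squared error for numeric predictions."""
    return sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


train, test = train_test_split(range(20))
print(len(train), len(test))                   # 15 5
print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))    # 0.75
print(precision([1, 0, 1, 1], [1, 0, 0, 1]))   # 1.0
print(round(rmse([3.0, 5.0], [2.0, 7.0]), 3))  # 1.581
```

A model would be fit only on `train` and scored on `test`; a large gap between training and test scores is a typical sign of overfitting.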

11. Deep Learning

- A subset of machine learning using complex neural networks.

- Capable of handling image, text, and audio data.

- Learns multiple levels of abstraction through layers.

- Requires large datasets and strong computing power.

- Used in AI applications like facial recognition or translation.

12. Natural Language Processing (NLP)

- Focuses on analyzing human language data.

- Used in tasks like sentiment analysis, chatbots, and text summarization.

- Combines linguistics with machine learning.

- Popular tools include NLTK, spaCy, and Hugging Face.

- Bridges communication between humans and machines.

13. Big Data Technologies

- Handle extremely large, complex datasets.

- Use distributed computing frameworks like Hadoop and Spark.

- Allow storage and processing beyond traditional databases.

- Enable real-time analysis at scale.

- Essential in industries like e-commerce or IoT.

14. Cloud Computing

- Provides flexible, on-demand computing resources.

- Used for data storage, model training, and deployment.

- Removes the need to buy and maintain your own physical servers.

- Popular platforms include AWS, GCP, and Azure.

- Supports scalability and collaboration.

15. Data Ethics and Privacy

- Ensures data is used responsibly and legally.

- Protects individuals’ rights through consent and transparency.

- Addresses bias, discrimination, and fairness in algorithms.

- Complies with laws like GDPR and HIPAA.

- Vital for trust and accountability.

16. Data Security

- Protects data from unauthorized access or corruption.

- Uses encryption, access controls, and secure protocols.

- Important for sensitive data like health or finance.

- Prevents data breaches and loss.

- Supports compliance and user confidence.

17. Business Intelligence (BI)

- Uses data to inform business decisions.

- Focuses on reporting, KPIs, and dashboarding.

- Tools include Power BI, Tableau, and Looker.

- Answers “what happened” and “why.”

- Often integrated with strategic planning.

18. Data Storytelling and Communication

- Converts technical findings into clear insights.

- Uses simple language, visuals, and context to explain results.

- Tailored for non-technical stakeholders.

- Helps drive action based on data.

- A vital skill for every data scientist.

19. Data Pipelines (ETL/ELT)

- Automates movement and transformation of data.

- ETL = Extract, Transform, Load; ELT = Extract, Load, Transform.

- Ensures consistent, fresh, and usable datasets.

- Tools include Apache Airflow, dbt, and Talend.

- Supports reliability and scalability in workflows.
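A toy ETL pipeline makes the three stages concrete. This sketch uses only the standard library, with an in-memory SQLite database standing in for a data warehouse; the CSV contents, table name, and function names are hypothetical. Orchestrators such as Airflow would schedule and monitor steps like these rather than replace them.

```python
import csv
import io
import sqlite3

# Extract source: a hypothetical CSV export from an operational system.
RAW_CSV = """order_id,amount,currency
1001,19.99,usd
1002,5.00,USD
1003,,usd
"""


def extract(text):
    """Extract: parse the raw CSV into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop rows with no amount, cast types, standardize currency codes."""
    return [
        (int(r["order_id"]), float(r["amount"]), r["currency"].upper())
        for r in rows
        if r["amount"]
    ]


def load(rows, conn):
    """Load: write the cleaned rows into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT COUNT(*), SUM(amount) FROM orders").fetchone())
```

In an ELT variant, the raw rows would be loaded first and the transformation would run as SQL inside the warehouse, which is the pattern dbt is designed for.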

20. Model Deployment

- Makes trained models available in real-world systems.

- Uses APIs, web apps, or dashboards for access.

- Tools: Flask, Docker, FastAPI, MLflow.

- Brings data science into action.

- Requires monitoring and scalability.

21. Model Monitoring

- Tracks whether model performance degrades over time.

- Monitors for drift, anomalies, or changing patterns.

- Triggers retraining when needed.

- Uses tools like Prometheus and Evidently AI.

- Crucial for production systems.
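One common way to monitor for drift is the Population Stability Index (PSI), which compares how a feature is distributed in recent data against a baseline. The sketch below is a minimal implementation with hypothetical bin edges and samples; the thresholds in the comment are a widely used rule of thumb rather than a standard, and dedicated monitoring tools may use different drift tests.

```python
from math import inf, log


def population_stability_index(expected, actual, edges):
    """PSI between a baseline sample and a recent sample over fixed bin edges."""

    def shares(values):
        counts = [0] * (len(edges) - 1)
        for v in values:
            for i in range(len(edges) - 1):
                if edges[i] <= v < edges[i + 1]:
                    counts[i] += 1
                    break
        # A small floor avoids division by zero and log(0) for empty bins.
        return [max(c / len(values), 1e-4) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical customer ages seen at training time vs. in last week's traffic.
baseline = [25, 28, 35, 40, 45, 55, 60, 33, 38, 42]
recent = [55, 58, 62, 65, 47, 52, 70, 61, 29, 66]
edges = [-inf, 30, 50, inf]

psi = population_stability_index(baseline, recent, edges)
# Rule of thumb: < 0.1 stable, 0.1 to 0.25 moderate shift, > 0.25 major shift.
print(round(psi, 2), "retrain" if psi > 0.25 else "ok")
```

A check like this could run on a schedule and trigger the retraining step described above when the index crosses the chosen threshold.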

22. Collaboration and Project Management

- Supports teamwork, versioning, and planning.

- Uses Git, Jupyter, Jira, and cloud-based platforms.

- Ensures reproducibility and smooth handoffs.

- Encourages agile development and transparency.

- Enables faster, more organized projects.

23. A/B Testing and Experimentation

- Tests two or more variations to measure performance.

- Common in product development and marketing.

- Helps validate changes with real user data.

- Involves randomization and statistical analysis.

- Drives evidence-based decisions.
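The statistical analysis behind a simple A/B test on conversion rates is often a two-proportion z-test. The sketch below implements it from the textbook formula with a pooled standard error; the conversion counts are hypothetical, and the test assumes users were randomly assigned to each variant and that the samples are large enough for the normal approximation.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # shared rate if A and B were equal
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = erfc(abs(z) / sqrt(2))  # equals 2 * (1 - Phi(|z|)) for a standard normal
    return z, p_value


# Hypothetical results: variant A converts 200 of 2,000 users, variant B 240 of 2,000.
z, p = two_proportion_z_test(200, 2000, 240, 2000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these numbers z is about 2.0 and p is about 0.043, so at a conventional 5% significance level the difference would be judged statistically significant.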

24. Reproducibility

- Ensures others can replicate your work exactly.

- Includes code, data, methods, and parameters.

- Boosts transparency, trust, and auditability.

- Supports collaboration and compliance.

- A core principle in scientific practice.
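In code, reproducibility usually starts with controlling randomness and recording the exact parameters used. The sketch below is a minimal illustration with hypothetical names (`run_experiment`, `config_fingerprint`, and the `params` keys are invented for the example): it uses a seeded local random generator and stores a hash of the configuration so a result can be traced back to the settings that produced it.

```python
import hashlib
import json
import random


def run_experiment(params):
    """Hypothetical experiment whose randomness is controlled only by params['seed']."""
    rng = random.Random(params["seed"])  # local generator; global random state is untouched
    sample = [rng.gauss(0, 1) for _ in range(params["n"])]
    return sum(sample) / len(sample)


def config_fingerprint(params):
    """Short, stable hash of the parameters (keys sorted so order does not matter)."""
    payload = json.dumps(params, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


params = {"seed": 2025, "n": 1000}
record = {"config": config_fingerprint(params), "params": params, "result": run_experiment(params)}
print(json.dumps(record))

# Re-running with the same parameters gives exactly the same result.
assert run_experiment(params) == record["result"]
```

In a real project the same record would also capture the code version (for example, a Git commit hash) and the version of the data used.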

25. Domain Knowledge

- Understanding the industry or field you're working in.

- Guides proper interpretation of data and results.

- Helps define relevant features and goals.

- Avoids misapplication of models.

- Vital for building useful, real-world solutions.

Data Science vs Related Fields

Comparison Table: Data Science vs Related Fields

| Field | Focus | Tools/Techniques | Goal | Example Use Case |
| --- | --- | --- | --- | --- |
| Data Science | Data collection, analysis, modeling, and storytelling | Python, R, SQL, ML algorithms, dashboards | Extract insights & predictions from data | Predict customer churn using transaction history |
| Machine Learning | Algorithms that learn patterns from data | Scikit-learn, TensorFlow, PyTorch | Train models to predict/classify autonomously | Detect fraud in banking transactions |
| Artificial Intelligence (AI) | Creating intelligent agents that can mimic human behavior | Neural networks, NLP, computer vision | Build smart systems that "think" and "act" intelligently | Voice assistants like Siri or Alexa |
| Statistics | Analyzing data for trends, uncertainty, and relationships | Hypothesis testing, regression, distributions | Understand data behavior and test hypotheses | A/B test to assess the impact of a new feature |
| Business Intelligence (BI) | Reporting, visualization, and descriptive analytics | Tableau, Power BI, SQL | Inform business decisions using historical data | Create sales dashboards for executives |
| Data Engineering | Building systems to collect, process, and move data | ETL pipelines, Spark, SQL, Airflow | Make data accessible and usable for analytics | Build a pipeline to move data from an app to a warehouse |
| Computer Science | Software systems, algorithms, and computing principles | Java, C++, data structures, OS, compilers | Build efficient systems and applications | Create a scalable backend for an analytics platform |
| Big Data | Handling massive datasets that exceed traditional systems’ capacity | Hadoop, Spark, NoSQL, Kafka | Store, process, and analyze large-scale datasets | Analyze billions of search queries per day |
| Data Analytics | Drawing conclusions from data using statistical and visual methods | Excel, SQL, Tableau, Python (basic) | Understand trends and make decisions based on data | Analyze monthly sales to improve strategy |
| Deep Learning | Advanced ML with multi-layer neural networks | TensorFlow, Keras, PyTorch | Learn from complex, high-dimensional data | Identify objects in images (e.g., self-driving cars) |

- Data Science is broad and combines elements from many of these fields.

- Machine Learning is a subset of AI, and Deep Learning is a subset of Machine Learning; both are heavily used in Data Science.

- BI is about historical reporting; Data Science focuses more on prediction.

- Statistics provides the theoretical basis for modeling in Data Science.

- Data Engineering makes data available; Data Science makes it useful.

- AI is the most ambitious—aiming to simulate human intelligence; Data Science applies AI where needed.

Why Data Science Matters – 30 Powerful Reasons

1. Better Decision-Making

- Data science replaces guesswork with informed choices by analyzing historical and real-time data.

- Executives and managers rely on insights from data to support strategy, reduce risk, and maximize ROI.

- This enables more consistent, scalable, and justifiable decisions across all business units.

- It helps avoid costly mistakes by validating ideas with empirical evidence.

- Organizations that adopt data-driven cultures often gain a significant edge over competitors.

2. Uncovering Hidden Patterns

- Large datasets often contain complex relationships that are invisible without analysis.

- Data science uses statistical tools and algorithms to uncover these patterns, trends, or anomalies.

- This includes customer behaviors, market shifts, and product performance.

- Understanding these hidden insights can lead to better targeting and operational improvements.

- It’s the foundation of many successful AI and analytics solutions.

3. Operational Efficiency

- By identifying inefficiencies in workflows, data science enables automation and optimization.

- It can streamline logistics, reduce overhead, and enhance productivity across departments.

- Operational dashboards help leaders monitor KPIs and act quickly on performance dips.

- Forecasting helps allocate resources more effectively and avoid bottlenecks.

- In a competitive economy, this translates directly into cost savings and agility.

4. Personalization

- Data science powers personalized recommendations, content, and user experiences.

- It analyzes user behavior to tailor suggestions like products, playlists, or news feeds.

- Companies like Netflix and Amazon use it to deliver unique experiences for each customer.

- This boosts engagement, loyalty, and conversion rates significantly.

- Personalization would be impossible at scale without automated data science models.
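A classic, simple technique behind recommendations is collaborative filtering: suggest items that similar users liked. The sketch below implements user-based collaborative filtering with cosine similarity on a tiny, hypothetical ratings table; it illustrates the idea only and is not a description of how Netflix or Amazon build their production systems.

```python
from math import sqrt

# Hypothetical user ratings (1 to 5); a missing title means "not watched".
ratings = {
    "ana": {"Drama A": 5, "Comedy B": 1, "Drama C": 4},
    "ben": {"Drama A": 4, "Drama C": 5, "Thriller D": 4},
    "cara": {"Comedy B": 5, "Comedy E": 4},
}


def cosine(u, v):
    """Cosine similarity between two sparse rating vectors (missing = 0)."""
    dot = sum(u[k] * v[k] for k in set(u) & set(v))
    norm = sqrt(sum(x * x for x in u.values())) * sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


def recommend(user, ratings, top_n=2):
    """Score unseen items by other users' ratings, weighted by their similarity."""
    scores = {}
    for other, theirs in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], theirs)
        for item, rating in theirs.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


print(recommend("ana", ratings))  # ['Thriller D', 'Comedy E']
```

Ana's tastes overlap most with Ben's, so Ben's highly rated thriller ranks first, while Cara's comedy gets a much smaller boost.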

5. Predictive Analytics

- Using machine learning and statistical modeling, data science can forecast what’s likely to happen.

- Applications include predicting customer churn, stock prices, or maintenance needs.

- These forecasts guide preventive actions, reducing losses and improving planning.

- Predictive insights also enhance strategic initiatives and scenario testing.

- It shifts businesses from reactive to proactive decision-making.

6. Product Development and Innovation

- Data science reveals what users truly want by analyzing feedback, usage, and market data.

- It helps teams design features, interfaces, and services that better align with real-world needs.

- A/B testing and user behavior modeling inform product iterations.

- Innovative products often emerge from data-driven design rather than intuition.

- This accelerates time to market and increases the chances of success.

7. Powering AI and Automation

- Data science is at the heart of AI systems that simulate human tasks like speaking, seeing, and deciding.

- From self-driving cars to smart assistants, these systems learn from massive datasets.

- Automation reduces human workload and improves accuracy in repetitive tasks.

- It’s especially impactful in fields like manufacturing, healthcare, and customer support.

- Without data science, AI would lack the foundation for learning and improvement.

8. Better Customer Experience

- By analyzing customer journeys and feedback, companies can detect friction points.

- Chatbots, recommendation engines, and predictive services improve convenience and satisfaction.

- Sentiment analysis reveals how customers feel in real time.

- Happy customers lead to better retention and brand advocacy.

- Data-driven customer experience (CX) strategies create loyalty and long-term value.

9. Turning Raw Data into Actionable Insights

- Raw data (unstructured logs, clicks, or texts) has little value on its own.

- Data science transforms this chaos into meaningful visualizations, summaries, and forecasts.

- It uses pipelines, models, and dashboards to produce real-time, actionable insights.

- These insights help identify problems, capture opportunities, and guide improvements.

- This transformation is what gives organizations a true competitive edge.

10. Evidence-Based Public Policy

- Governments and nonprofits use data science to shape policies backed by real-world data.

- It helps track population health, education outcomes, crime rates, and more.

- Data insights help make policies more impactful, equitable, and efficient.

- They also allow better targeting of public resources and social programs.

- In crises like pandemics, evidence-based decisions save lives and reduce harm.

11. Real-Time Decision-Making

- Data science enables systems to analyze and react to events as they happen.

- In industries like finance, logistics, and healthcare, real-time data is crucial for timely decisions.

- Examples include fraud detection, traffic routing, and emergency dispatch systems.

- Streaming analytics tools and predictive models process live data streams quickly.

- This minimizes delays and allows organizations to respond to change almost immediately.

12. Fraud Detection and Security

- Data science identifies irregular behavior patterns that may indicate fraud or security breaches.

- It uses anomaly detection algorithms to monitor transactions, access logs, and network activity.

- Banks, insurance firms, and online platforms rely on this to prevent costly losses.

- These systems learn and adapt, becoming better at catching subtle threats over time.

- It enhances trust and protects both organizations and their users.
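A simple form of anomaly detection flags values that sit far from a customer's typical behavior. This sketch uses the modified z-score, based on the median and the median absolute deviation (MAD), which is less distorted by the very outliers it is trying to find than a mean-based z-score; the 3.5 threshold is a commonly cited convention, and the transaction history is hypothetical. Production fraud systems combine many such signals and retrain their models over time.

```python
from statistics import median


def flag_anomalies(amounts, threshold=3.5):
    """Return values whose modified z-score (median/MAD based) exceeds threshold."""
    med = median(amounts)
    mad = median([abs(a - med) for a in amounts])
    if mad == 0:
        return []  # no spread at all, so nothing stands out
    # 0.6745 rescales MAD so the score is comparable to a standard z-score for normal data.
    return [a for a in amounts if 0.6745 * abs(a - med) / mad > threshold]


# Hypothetical card transactions for one customer, in dollars.
history = [23.5, 41.0, 18.2, 36.9, 29.4, 25.0, 33.3, 2150.0, 27.8, 31.1]
print(flag_anomalies(history))  # [2150.0]
```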

13. Healthcare

- Data science supports disease diagnosis, treatment personalization, and hospital resource planning.

- It can predict patient deterioration or readmission using electronic health records.

- Doctors benefit from AI-assisted imaging, risk scoring, and drug discovery models.

- During pandemics, it aids in tracking infections and optimizing vaccination strategies.

- Ultimately, it helps save lives and reduce healthcare costs.

14. Smart Cities

- Cities use data science to monitor and improve services like traffic flow, energy use, and waste management.

- IoT sensors gather real-time data, which is analyzed for better urban planning.

- This leads to reduced congestion, lower emissions, and better public safety.

- Smart infrastructure responds automatically to demand and environmental conditions.

- Data science makes cities more livable, efficient, and sustainable.

15. Competitive Advantage

- Organizations that leverage data science gain deeper market insights than their competitors.

- They can predict trends, adapt strategies quickly, and deliver better customer experiences.

- This agility allows them to outpace rivals in pricing, marketing, and innovation.

- Companies like Google, Amazon, and Tesla rely on data science for this edge.

- It’s now a strategic necessity, not just a technical tool.

16. Scientific Research

- Data science speeds up discovery in fields like genomics, physics, and astronomy.

- Researchers use algorithms to process enormous datasets that are too complex for manual analysis.

- This enables breakthroughs in areas such as drug development or space exploration.

- It also helps validate theories through simulation and experimentation.

- Science has become more data-driven than ever before.

17. Marketing Optimization

- Marketers use data science to understand audience behavior and fine-tune their strategies.

- It helps segment users, determine the best channels, and predict campaign ROI.

- Targeted advertising based on analytics results in better engagement and conversion.

- A/B testing and customer journey mapping improve messaging effectiveness.

- This leads to smarter spending and higher returns.

18. Supply Chain and Logistics

- Data science forecasts demand, optimizes inventory levels, and reduces supply chain delays.

- It helps companies avoid stockouts or overstocking, improving cash flow.

- Real-time analytics monitors shipping, warehousing, and distribution operations.

- Route optimization saves fuel and time in logistics networks.

- This ensures efficiency and customer satisfaction at every step.

19. Finance and Risk Management

- Financial institutions rely on data science for credit scoring, portfolio analysis, and fraud prevention.

- Models estimate likely market movements and assess risk across various investment strategies.

- Insurance companies use it to estimate claim likelihood and pricing.

- It supports regulatory compliance through detailed reporting and audit trails.

- This helps reduce financial risk and improve profitability.

20. Education

- Schools and edtech platforms analyze learning data to tailor education for each student.

- It helps identify struggling learners early and recommend personalized interventions.

- Curriculum effectiveness can be assessed with measurable outcomes.

- Predictive analytics supports admissions, dropout prevention, and program design.

- This creates a more engaging, adaptive, and successful learning environment.

21. Market and Competitive Intelligence

- Data science helps businesses analyze market trends, consumer behavior, and competitor strategies.

- By mining publicly available data—like social media, reviews, and pricing—it identifies business opportunities.

- This allows companies to anticipate shifts and adapt faster than competitors.

- Competitive intelligence tools powered by data science help in positioning, timing, and strategy.

- Ultimately, it transforms raw external data into strategic advantages.

22. Human-Computer Interaction

- Natural Language Processing (NLP) and computer vision, fields closely tied to data science, enable machines to “understand” humans.

- Applications include voice assistants (like Siri or Alexa), smart chatbots, and image recognition systems.

- Data science enables real-time interpretation of text, speech, and gestures.

- It improves the intuitiveness and usefulness of modern digital tools.

- These technologies are shaping how people interact with devices, apps, and environments.

23. Reducing Bias in Decisions

- Properly designed data science systems can counteract personal or institutional biases.

- By grounding decisions in data rather than purely subjective judgment, organizations can make them fairer and more consistent.

- For example, algorithms can help detect hiring bias or lending discrimination.

- However, ethical implementation and bias auditing are critical to success.

- Used responsibly, data science promotes equity and transparency.

24. Climate and Environmental Science

- Climate scientists use data science to analyze weather, CO₂ emissions, sea levels, and deforestation trends.

- Models simulate future climate scenarios and predict natural disasters.

- Satellite and sensor data help track environmental changes in real time.

- This aids governments and organizations in sustainability planning and disaster preparedness.

- It's vital for global climate action and policy development.

25. Journalism and Fact-Checking

- Data science enhances investigative journalism through data visualization, scraping, and analysis.

- It powers tools that verify claims, trace misinformation, and expose fraud.

- Reporters use it to sift through public records and databases efficiently.

- It enables data-driven storytelling that’s more transparent and trustworthy.

- This helps maintain the integrity of information in the digital age.

26. Manufacturing and Industry 4.0

- Smart factories use data science to automate production, monitor quality, and optimize workflows.

- Predictive maintenance models prevent costly equipment breakdowns.

- Sensors and IoT devices stream data that algorithms analyze in real time.

- This boosts productivity, reduces downtime, and cuts operational costs.

- Data science is central to modern industrial transformation.

27. Legal and Compliance

- Legal firms and corporate compliance teams use data science to review contracts and detect anomalies.

- Natural Language Processing can extract key terms from thousands of documents.

- Risk assessment models help identify areas of legal vulnerability.

- Regulators also use analytics for surveillance and fraud detection.

- It saves time, reduces errors, and ensures regulatory alignment.

28. Sports Analytics

- Teams analyze player performance, opponent strategies, and injury risk using data science.

- Wearables and sensors generate real-time physiological data.

- This informs training, game tactics, and even player recruitment.

- Fans also benefit through personalized content and in-depth game analysis.

- Data science turns sports into a measurable, optimized science.

29. Humanitarian Work and Social Good

- NGOs and aid organizations use data to locate needs, allocate resources, and track impact.

- During disasters, data science supports early warning systems and efficient response.

- Crowdsourced and geospatial data help map crisis zones and deliver targeted relief.

- It improves transparency and accountability in humanitarian work.

- From poverty to pandemics, data science enables smarter action for social good.

30. The Future of Work

- As automation and AI spread, data science is central to the evolving workforce.

- It drives demand for new roles—like data engineers, ML specialists, and AI ethicists.

- Industries are rethinking processes, business models, and workforce skills.

- Organizations embracing data science become more adaptive and future-ready.

- In the digital economy, it's a foundational pillar of transformation.

Applications of Data Science Across Industries

1. Healthcare

- Predict disease outbreaks and patient outcomes

- Assist in diagnostics and personalized treatments

- Optimize hospital operations and resource allocation

2. Finance and Banking

- Credit scoring and loan risk assessment

- Fraud detection using anomaly models

- Algorithmic trading and customer segmentation

3. Retail and E-commerce

- Personalized product recommendations

- Inventory management and demand forecasting

- Customer lifetime value prediction

4. Marketing

- Campaign targeting and optimization

- Social media sentiment analysis

- A/B testing and consumer behavior modeling

5. Manufacturing

- Predictive maintenance and quality control

- Process automation and supply chain analytics

- Sensor data monitoring (IoT-enabled)

6. Transportation and Logistics

- Route optimization and traffic prediction

- Fleet management and fuel efficiency analysis

- Demand forecasting for ride-sharing and shipping

7. Education

- Personalized learning and curriculum design

- Dropout prediction and student performance tracking

- Academic research and policy analysis

8. Agriculture

- Crop yield prediction and soil analysis

- Weather pattern forecasting

- Precision farming using satellite data

9. Energy and Utilities

- Consumption forecasting and smart grid optimization

- Predictive maintenance of equipment

- Renewable energy output modeling

10. Media and Entertainment

- Recommendation systems (e.g., Netflix, YouTube)

- Audience engagement analysis

- Content trend prediction

11. Government and Public Sector

- Census analysis and public service planning

- Crime prediction and resource deployment

- Crisis response and disaster management

12. Cybersecurity

- Threat detection using behavioral analytics

- Intrusion detection and real-time alerts

- Risk scoring and identity verification

13. Sports

- Player performance tracking and optimization

- Game strategy development using analytics

- Fan engagement and merchandising strategies

14. Telecommunications

- Customer churn prediction

- Network optimization and failure detection

- Personalized service offerings

15. Real Estate

- Price prediction and investment analysis

- Smart city planning using geospatial data

- Property recommendation engines

Popular Tools and Technologies in Data Science

1. Programming Languages

- Python – The most widely used language for data science, thanks to libraries like Pandas, NumPy, Scikit-learn, TensorFlow, and PyTorch.

- R – Powerful for statistical analysis and data visualization; preferred in academia and research.

- SQL – Essential for querying and managing structured data in relational databases.

- Julia – High-performance language gaining popularity in numerical and scientific computing.

2. Data Handling & Processing

- Pandas – Python library for data manipulation and analysis.

- NumPy – Core Python package for numerical computing.

- Apache Spark – Distributed computing engine for big data processing.

- Dask – Parallel computing in Python for larger-than-memory datasets.

3. Data Visualization Tools

- Matplotlib – Basic charting library in Python.

- Seaborn – Built on Matplotlib; provides beautiful statistical plots.

- Plotly – Interactive, web-based visualizations.

- Tableau – Powerful BI tool for interactive dashboards and reports.

- Power BI – Microsoft’s dashboarding and reporting tool, widely used in business environments.

4. Machine Learning Libraries & Frameworks

- Scikit-learn – Simple and effective tools for traditional ML algorithms.

- TensorFlow – Google’s open-source deep learning library.

- Keras – User-friendly neural network API; originally built on top of TensorFlow, and since Keras 3 it can also run on JAX or PyTorch.

- PyTorch – Deep learning library originally developed at Facebook (now Meta), preferred for research and flexibility.

- XGBoost / LightGBM – High-performance gradient boosting tools.

5. Cloud Platforms

- Google Cloud Platform (GCP) – Offers BigQuery and Vertex AI (which includes AutoML) for data science at scale.

- Amazon Web Services (AWS) – Includes SageMaker, Redshift, and EC2 for model building and deployment.

- Microsoft Azure – Azure ML Studio and cloud integration for enterprises.

- Databricks – Unified data analytics platform based on Apache Spark.

6. Data Engineering & Workflow Tools

- Apache Airflow – For scheduling and monitoring data pipelines.

- dbt (data build tool) – For transforming data in your warehouse.

- Kafka – Real-time data streaming and messaging.

- Snowflake – Cloud-based data warehouse for fast and scalable analytics.

7. Version Control & Collaboration

- Git & GitHub – For version control and code collaboration.

- Jupyter Notebooks – Interactive coding environment for Python.

- Google Colab – Cloud-based Jupyter alternative with GPU support.

8. MLOps & Deployment Tools

- MLflow – Tool for managing the ML lifecycle: experiments, deployment, and tracking.

- Docker – Containerization tool to package models for deployment.

- Streamlit / Gradio – Tools to create simple web apps for ML models.

- FastAPI / Flask – Lightweight web frameworks for deploying models as APIs.

Popular Tools & Technologies in Data Science – Summary Table

| Category | Tool / Technology | Purpose / Description |
| --- | --- | --- |
| 🧪 Programming Languages | Python | General-purpose language with strong data science libraries |
| | R | Language for statistical computing and data visualization |
| | SQL | Query language for managing structured data in databases |
| | Julia | High-performance language for numerical/scientific computing |
| 📦 Data Handling & Processing | Pandas | Data manipulation and analysis in Python |
| | NumPy | Numerical computing with multidimensional arrays |
| | Apache Spark | Distributed processing of big data |
| | Dask | Parallel computing for large datasets in Python |
| 📊 Visualization Tools | Matplotlib | Basic plotting library for Python |
| | Seaborn | Statistical visualization on top of Matplotlib |
| | Plotly | Interactive, browser-based visualizations |
| | Tableau | BI platform for dashboards and visual storytelling |
| | Power BI | Microsoft’s tool for business analytics and reporting |
| 🤖 Machine Learning Libraries | Scikit-learn | Classical ML algorithms in Python |
| | TensorFlow | Deep learning library by Google |
| | Keras | High-level API for building neural networks |
| | PyTorch | Flexible deep learning library originally developed by Facebook (now Meta) |
| | XGBoost / LightGBM | Gradient boosting frameworks for high-performance ML |
| ☁️ Cloud Platforms | AWS (SageMaker, Redshift, etc.) | End-to-end data science services and hosting |
| | Google Cloud Platform (GCP) | BigQuery, AutoML, Vertex AI, and more |
| | Microsoft Azure | Cloud-based ML and data solutions |
| | Databricks | Unified analytics platform built on Apache Spark |
| 🛠️ Data Engineering Tools | Apache Airflow | Workflow automation and scheduling |
| | dbt (data build tool) | SQL-based transformation of data in warehouses |
| | Kafka | Real-time data streaming and message handling |
| | Snowflake | Cloud-based data warehouse for fast analytics |
| 📁 Collaboration Tools | Git / GitHub | Version control and collaborative coding |
| | Jupyter Notebooks | Interactive development environment for Python |
| | Google Colab | Cloud-based Jupyter alternative with GPU support and a free tier |
| 🔒 MLOps & Deployment Tools | MLflow | Manages ML lifecycle and experiment tracking |
| | Docker | Containerization for consistent deployment |
| | Streamlit / Gradio | Simple web apps for model demos and interfaces |
| | FastAPI / Flask | Frameworks to deploy ML models as APIs |


Common Data Science Workflow

1. Problem Definition

- Before any data is touched, the project starts by clearly understanding the problem or objective.

- This includes identifying the business goal, success metrics, and expected outcomes.

- Is the problem predictive (e.g., “Will this customer churn?”), descriptive (e.g., “What happened?”), or prescriptive (e.g., “What should we do?”)?

- It requires discussions with stakeholders, domain experts, and project owners.

- Misunderstanding the problem at this stage can lead to wasted effort and misleading conclusions.

- Data scientists ask clarifying questions to ensure alignment with business needs.

- Defining the scope and constraints early helps set realistic goals.

- This phase also defines the target variable if it's a supervised learning task.

- Deliverables may include a problem statement document and a set of business KPIs.

- It lays the foundation for the entire workflow.
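One way to make this phase's deliverables concrete is to record the problem statement as structured data that later stages can check. The sketch below is a minimal illustration, not a standard format: the `ProblemStatement` class, its fields, and the churn example are hypothetical, and it assumes that predictive tasks are supervised and therefore need a target variable.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical structure for illustration; not a standard or library format.
PROBLEM_TYPES = {"predictive", "descriptive", "prescriptive"}


@dataclass
class ProblemStatement:
    """Records the goal, problem type, KPIs, and (for supervised tasks) the target."""
    business_goal: str
    problem_type: str
    kpis: List[str] = field(default_factory=list)
    target_variable: Optional[str] = None
    constraints: List[str] = field(default_factory=list)

    def validate(self) -> List[str]:
        """Return a list of gaps; an empty list means the statement looks complete."""
        issues = []
        if self.problem_type not in PROBLEM_TYPES:
            issues.append(f"unknown problem type: {self.problem_type!r}")
        if not self.kpis:
            issues.append("no success metrics (KPIs) defined")
        # Assumption: predictive tasks are treated as supervised, so they need a target.
        if self.problem_type == "predictive" and not self.target_variable:
            issues.append("predictive task has no target variable")
        return issues


churn = ProblemStatement(
    business_goal="Reduce monthly customer churn",
    problem_type="predictive",
    kpis=["churn rate", "retention campaign ROI"],
    target_variable="churned_next_30_days",
)
print(churn.validate())  # []
```

Checking the statement this way makes gaps, such as a missing target variable, visible before any data is collected.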

2. Data Collection

- Once the problem is defined, the next step is gathering relevant data.

- This can involve structured data (databases), unstructured data (text, images), or semi-structured data (logs, JSON).

- Data may come from internal systems (CRM, ERP), external APIs, public datasets, or surveys.

- In modern teams, data engineers often help set up ingestion pipelines or streaming sources.

- It's essential to collect high-quality data that truly represents the problem domain.

- Data access permissions, compliance, and privacy regulations (e.g., GDPR) must be considered.

- Web scraping or APIs may be used when publicly available or real-time data is needed.

- The more relevant and diverse the data, the better the modeling outcomes.

- In many real-world cases, data availability shapes the kind of analysis or model possible.

- This stage concludes with raw data stored and documented for further processing.
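To illustrate how structured and semi-structured sources end up in one raw dataset, the sketch below reads a small CSV export and a set of JSON log lines (inlined as strings so it runs on its own) and joins them into a list of records. The field names and sample data are hypothetical; in practice these would come from a database, an API, or a pipeline, and Pandas would often replace the standard-library parsing.

```python
import csv
import io
import json

# Hypothetical structured export (e.g., from a CRM) as CSV text.
CRM_CSV = """customer_id,plan,monthly_fee
1,basic,9.99
2,premium,19.99
"""

# Hypothetical semi-structured event logs, one JSON object per line.
EVENT_LOG = """{"customer_id": 1, "event": "login", "device": {"os": "ios"}}
{"customer_id": 2, "event": "support_ticket"}
{"customer_id": 1, "event": "logout", "device": {"os": "ios"}}
"""


def load_customers(text):
    """Parse CSV rows into dicts keyed by integer customer_id."""
    reader = csv.DictReader(io.StringIO(text))
    return {int(row["customer_id"]): row for row in reader}


def load_events(text):
    """Parse JSON lines, flattening the optional nested 'device' object."""
    events = []
    for line in text.splitlines():
        if not line.strip():
            continue
        record = json.loads(line)
        device = record.pop("device", {})
        record["device_os"] = device.get("os")  # None when the field is absent
        events.append(record)
    return events


def collect(crm_text, log_text):
    """Join events to customer attributes, keeping raw string values for later cleaning."""
    customers = load_customers(crm_text)
    rows = []
    for event in load_events(log_text):
        customer = customers.get(event["customer_id"], {})
        rows.append({**event, "plan": customer.get("plan"),
                     "monthly_fee": customer.get("monthly_fee")})
    return rows


raw = collect(CRM_CSV, EVENT_LOG)
print(len(raw), raw[0])
```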

3. Data Cleaning and Preparation

- Raw data is often messy, incomplete, inconsistent, or duplicated.

- Data cleaning involves handling missing values, fixing formatting issues, correcting outliers, and deduplicating records.

- It also includes converting data types, standardizing units, and validating entries.

- Missing values may be imputed using statistical methods or machine learning.

- Outliers are identified using visualization or statistical thresholds.

- Noise reduction ensures better feature representation and model performance.

- Data preparation also includes data transformation, normalization, and aggregation.

- This phase is time-consuming but critical: a commonly cited estimate is that 70–80% of a data scientist’s time is spent here.

- Without clean data, even the best models will fail to perform or generalize.

- This stage ends with a refined dataset ready for exploration and modeling.
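A minimal, dependency-free sketch of several cleaning steps listed above: deduplication, type conversion, median imputation of missing values, and flagging outliers with the 1.5 * IQR rule (one common statistical threshold, chosen here for illustration). The column names and values are hypothetical; with Pandas the first steps map to `drop_duplicates`, `astype`, and `fillna`.

```python
import statistics

# Hypothetical raw records: a duplicate row, a missing value, inconsistent formatting.
raw_rows = [
    {"id": "1", "age": "34", "income": "52000"},
    {"id": "2", "age": "",   "income": "61000"},
    {"id": "1", "age": "34", "income": "52000"},     # exact duplicate
    {"id": "3", "age": "29", "income": " 48000 "},   # stray whitespace
    {"id": "4", "age": "41", "income": "950000"},    # suspicious value
    {"id": "5", "age": "38", "income": "57000"},
]


def deduplicate(rows):
    """Drop exact duplicate records while preserving order."""
    seen, unique = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def to_number(value):
    """Convert a string to float, treating blanks as missing (None)."""
    value = value.strip()
    return float(value) if value else None


def impute_median(rows, column):
    """Replace missing values in `column` with the median of the observed values."""
    observed = [r[column] for r in rows if r[column] is not None]
    median = statistics.median(observed)
    for r in rows:
        if r[column] is None:
            r[column] = median
    return rows


def iqr_outliers(values, k=1.5):
    """Indices of values outside [Q1 - k*IQR, Q3 + k*IQR], using inclusive quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < low or v > high]


rows = deduplicate(raw_rows)
rows = [{"id": int(r["id"]), "age": to_number(r["age"]), "income": to_number(r["income"])}
        for r in rows]
rows = impute_median(rows, "age")
# Flag (rather than silently delete) outliers so they can be reviewed.
outlier_ids = [rows[i]["id"] for i in iqr_outliers([r["income"] for r in rows])]
print(len(rows), rows[1]["age"], outlier_ids)  # 5 36.0 [4]
```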

4. Exploratory Data Analysis (EDA)

- EDA helps analysts understand the structure, patterns, and relationships within the dataset.

- It involves using visual tools (histograms, scatter plots, box plots) and statistics (mean, variance, correlation).

- This step identifies potential features, redundant variables, or data imbalances.

- It also helps detect biases, anomalies, or seasonality in the data.

- Correlation matrices and pair plots are common tools to spot multicollinearity or relationships.

- EDA can inspire new hypotheses and guide the feature engineering process.

- The goal is to form a mental map of the data’s story before modeling.

- It also reveals the scales and distributions of the variables.

- Jupyter Notebooks, Seaborn, and Plotly are often used for interactive EDA.

- This phase may lead to iterative cycles of going back to clean or re-collect data.
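A first numeric EDA pass can be sketched with the standard library: per-column summary statistics plus pairwise Pearson correlations, the numeric counterpart of the correlation matrices and pair plots mentioned above. The dataset, column names, and the 0.9 "strongly correlated" cut-off are hypothetical; in Pandas, `DataFrame.describe()` and `DataFrame.corr()` cover the same ground.

```python
import math
import statistics

# Hypothetical cleaned dataset, stored column-wise.
data = {
    "ad_spend": [10.0, 12.0, 15.0, 18.0, 20.0, 25.0],
    "visits":   [100.0, 118.0, 150.0, 172.0, 205.0, 240.0],
    "returns":  [5.0, 7.0, 4.0, 6.0, 5.0, 7.0],
}


def summarize(values):
    """Basic descriptive statistics for one numeric column."""
    return {
        "mean": statistics.mean(values),
        "stdev": statistics.stdev(values),
        "min": min(values),
        "median": statistics.median(values),
        "max": max(values),
    }


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


for name, values in data.items():
    stats = summarize(values)
    print(f"{name:>9}: " + ", ".join(f"{k}={v:.2f}" for k, v in stats.items()))

columns = list(data)
for i, a in enumerate(columns):
    for b in columns[i + 1:]:
        r = pearson(data[a], data[b])
        # Illustrative cut-off for flagging possible multicollinearity.
        flag = "  <- strongly correlated" if abs(r) > 0.9 else ""
        print(f"corr({a}, {b}) = {r:+.2f}{flag}")
```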

5. Feature Engineering

- This step involves creating new variables (features) that better represent the underlying problem.

- Good features can dramatically improve model performance.

- Feature engineering includes encoding categorical data, creating interaction terms, or generating time-based features.

- Techniques like one-hot encoding, scaling, polynomial features, and domain-specific transformations are used.

- Text data might be converted to numerical format using TF-IDF or word embeddings.

- Images might be processed into pixel arrays or feature vectors.

- Sometimes features are aggregated across groups to capture higher-level patterns.

- It may also involve dimensionality reduction (e.g., PCA) if there are too many variables.

- A deep understanding of the domain often leads to insightful, valuable features.

- This stage makes raw data model-ready and meaningful.
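Several techniques from the list above (one-hot encoding, min-max scaling, an interaction term, and time-based features) can be shown on a tiny record set. This is a standard-library sketch with hypothetical column names; Scikit-learn's `OneHotEncoder` and `MinMaxScaler` are the usual production-grade equivalents.

```python
from datetime import datetime

# Hypothetical cleaned records.
records = [
    {"plan": "basic",   "monthly_fee": 10.0, "logins": 4,  "signup": "2024-01-06"},
    {"plan": "premium", "monthly_fee": 20.0, "logins": 12, "signup": "2024-03-15"},
    {"plan": "pro",     "monthly_fee": 30.0, "logins": 7,  "signup": "2024-03-18"},
]


def one_hot(values):
    """Map each category to a 0/1 indicator vector (categories sorted for stability)."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]


def min_max_scale(values):
    """Rescale numbers linearly to the [0, 1] range."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [0.0 if span == 0 else (v - lo) / span for v in values]


def build_features(rows):
    categories, plan_vectors = one_hot([r["plan"] for r in rows])
    fee_scaled = min_max_scale([r["monthly_fee"] for r in rows])
    features = []
    for r, plan_vec, fee in zip(rows, plan_vectors, fee_scaled):
        signup = datetime.strptime(r["signup"], "%Y-%m-%d")
        row = {f"plan_{c}": bit for c, bit in zip(categories, plan_vec)}
        row["fee_scaled"] = fee
        row["fee_x_logins"] = r["monthly_fee"] * r["logins"]   # interaction term
        row["signup_month"] = signup.month                      # time-based feature
        row["signup_weekend"] = int(signup.weekday() >= 5)      # Saturday=5, Sunday=6
        features.append(row)
    return features


for row in build_features(records):
    print(row)
```

In a real pipeline, the category list and the min/max values would be learned on the training split only and then reused for validation, test, and production data, so that no information leaks from held-out data.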

6. Model Building and Training

- Here, machine learning or statistical models are trained on the prepared dataset.

- The model choice depends on the problem type: regression, classification, clustering, etc.

- Algorithms like Linear Regression, Random Forest, XGBoost, SVM, and Neural Networks are common.

- Multiple models may be tested to compare performance.

- This stage also includes splitting the data into training, validation, and test sets.

- Hyperparameters are tuned on the validation set (or with cross-validation) to optimize performance.

- Cross-validation gives a more reliable estimate of how well the model generalizes and helps detect overfitting.

- Frameworks like Scikit-learn, TensorFlow, and PyTorch are commonly used.

- The goal is to find a model that learns patterns well and performs consistently.

- Outputs include saved model files and performance metrics.
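To make splitting, tuning, and cross-validation concrete, the sketch below fits a one-feature linear regression by least squares, holds out a test set, and uses 5-fold cross-validation on the training data to choose a ridge penalty `alpha` as a simple hyperparameter. The synthetic data, candidate values, and helper names are hypothetical; Scikit-learn's `train_test_split`, `KFold`, and `Ridge` are the usual tools for these steps.

```python
import random


def fit_ridge_1d(xs, ys, alpha=0.0):
    """Fit y = a + b*x by least squares; alpha > 0 adds a ridge penalty on the slope b."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / (sxx + alpha)
    return my - slope * mx, slope  # (intercept, slope); the intercept is not penalized


def mse(model, xs, ys):
    """Mean squared error of a fitted (intercept, slope) model."""
    a, b = model
    return sum((a + b * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def k_fold_indices(n, k):
    """Split range(n) into k contiguous folds of near-equal size."""
    sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cross_val_mse(xs, ys, alpha, k=5):
    """Average validation MSE over k folds for one ridge penalty."""
    scores = []
    for fold in k_fold_indices(len(xs), k):
        held_out = set(fold)
        train = [i for i in range(len(xs)) if i not in held_out]
        model = fit_ridge_1d([xs[i] for i in train], [ys[i] for i in train], alpha)
        scores.append(mse(model, [xs[i] for i in fold], [ys[i] for i in fold]))
    return sum(scores) / len(scores)


# Synthetic data: y = 3 + 2x plus Gaussian noise, generated with a fixed seed.
# Samples are i.i.d., so contiguous splits act like random ones; real data should be shuffled.
rng = random.Random(42)
xs = [rng.uniform(0, 10) for _ in range(100)]
ys = [3 + 2 * x + rng.gauss(0, 1) for x in xs]

split = int(0.8 * len(xs))  # hold out 20% as a test set
x_train, y_train, x_test, y_test = xs[:split], ys[:split], xs[split:], ys[split:]

candidates = [0.0, 1.0, 10.0, 100.0]
cv_scores = {alpha: cross_val_mse(x_train, y_train, alpha) for alpha in candidates}
best_alpha = min(cv_scores, key=cv_scores.get)

model = fit_ridge_1d(x_train, y_train, best_alpha)
print("CV MSE by alpha:", {a: round(s, 3) for a, s in cv_scores.items()})
print("best alpha:", best_alpha, "test MSE:", round(mse(model, x_test, y_test), 3))
```

The test set is used exactly once, after the hyperparameter has been chosen; using it during tuning would make the final score optimistic.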

7. Model Evaluation

- After training, the model’s performance is rigorously evaluated using specific metrics.

- Classification tasks might use accuracy, precision, recall, F1-score, or ROC-AUC.

- Regression tasks use RMSE, MAE, or R² to assess error and fit.

- Confusion matrices and precision-recall curves provide deeper insight into performance.

- The model is tested on unseen data (test set) to check generalization ability.

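- Overfitting and underfitting are diagnosed by comparing performance on the training data with performance on held-out (validation or test) data.

- The bias-variance trade-off is considered to strike a balance between simplicity and performance.

- For imbalanced data, precision, recall, F1-score, or precision-recall curves are preferred over accuracy, which can look high even when most minority-class cases are missed.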

- Evaluation may also include stakeholder review for business relevance.

- If results are unsatisfactory, the workflow loops back to data preparation or feature engineering.
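The metrics above are simple to compute by hand, which also makes the warning about imbalanced data easy to see. In this standard-library sketch (hypothetical labels and predictions), a model that almost always predicts the majority class reaches high accuracy but low recall; Scikit-learn's `sklearn.metrics` module provides tested implementations of the same metrics.

```python
import math


def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, fn, tn) for a binary classification task."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    tn = sum(t != positive and p != positive for t, p in zip(y_true, y_pred))
    return tp, fp, fn, tn


def classification_report(y_true, y_pred):
    """Accuracy, precision, recall, and F1 (0.0 where a ratio is undefined)."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def regression_report(y_true, y_pred):
    """RMSE, MAE, and R^2 for numeric predictions."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mean_t = sum(y_true) / n
    ss_res = sum(e ** 2 for e in errors)
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    return {
        "rmse": math.sqrt(ss_res / n),
        "mae": sum(abs(e) for e in errors) / n,
        "r2": 1 - ss_res / ss_tot,
    }


# Imbalanced example: 95 negatives, 5 positives; the model finds only 1 positive.
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 95 + [1, 0, 0, 0, 0]
print(classification_report(y_true, y_pred))
# accuracy is 0.96, yet recall is only 0.2

print(regression_report([3.0, 5.0, 7.0, 9.0], [2.5, 5.0, 7.5, 10.0]))
```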

8. Model Deployment

- Once validated, the model is deployed into a production environment for real-world use.

- Deployment can be done through APIs, web apps, batch jobs, or embedded systems.

- Tools like Docker, FastAPI, Flask, or cloud services (AWS, GCP, Azure) are used.

- CI/CD pipelines help automate deployment, testing, and rollback.

- Monitoring is essential to ensure the model performs well on real-world data.

- Scalability, latency, and uptime are technical considerations during deployment.

- Models may be integrated into dashboards, apps, or automated workflows.

- Versioning is important to track changes and updates.

- This phase bridges the gap between experimentation and practical value.

- A successful deployment means the model is now actively supporting decisions.
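Serving a model as an API, as FastAPI or Flask do, comes down to a function that turns an HTTP request into a prediction. The sketch below uses the standard library's WSGI interface (the interface Flask applications implement) so it has no dependencies; the `/predict` route, the JSON payload shape, the version string, and the linear coefficients are all hypothetical, and the `call` helper exercises the app in-process the way a test client would.

```python
import io
import json
from wsgiref.util import setup_testing_defaults

# Hypothetical model metadata and weights, standing in for a trained, versioned model file.
MODEL_VERSION = "churn-model-1.0.0"
COEFFICIENTS = {"intercept": -1.5, "logins": -0.2, "tickets": 0.8}


def predict(features):
    """Linear score for one record; missing features default to 0."""
    score = COEFFICIENTS["intercept"]
    for name in ("logins", "tickets"):
        score += COEFFICIENTS[name] * float(features.get(name, 0))
    return score


def app(environ, start_response):
    """Minimal WSGI app: POST /predict with a JSON body returns a JSON prediction."""
    headers = [("Content-Type", "application/json")]
    if environ.get("PATH_INFO") != "/predict" or environ.get("REQUEST_METHOD") != "POST":
        start_response("404 Not Found", headers)
        return [b'{"error": "not found"}']
    try:
        length = int(environ.get("CONTENT_LENGTH") or 0)
        payload = json.loads(environ["wsgi.input"].read(length))
        body = {"score": predict(payload), "model_version": MODEL_VERSION}
        status = "200 OK"
    except (ValueError, TypeError, AttributeError) as exc:
        # Malformed JSON, non-numeric features, or a payload that is not a JSON object.
        body, status = {"error": str(exc)}, "400 Bad Request"
    start_response(status, headers)
    return [json.dumps(body).encode("utf-8")]


def call(wsgi_app, method, path, body=b""):
    """Invoke a WSGI app in-process and return (status, decoded JSON body)."""
    environ = {}
    setup_testing_defaults(environ)
    environ.update({
        "REQUEST_METHOD": method,
        "PATH_INFO": path,
        "CONTENT_LENGTH": str(len(body)),
        "wsgi.input": io.BytesIO(body),
    })
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    payload = b"".join(wsgi_app(environ, start_response))
    return captured["status"], json.loads(payload)


print(call(app, "POST", "/predict", b'{"logins": 5, "tickets": 2}'))
```

To try it over HTTP during development, `wsgiref.simple_server.make_server("", 8000, app).serve_forever()` serves the same `app`; a production setup would more likely run a dedicated WSGI server, for example inside a Docker container. Returning `model_version` with each prediction supports the versioning point above.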

9. Monitoring and Maintenance

- Post-deployment, the model needs ongoing monitoring to ensure continued effectiveness.

- Data drift (changes in input data patterns) and concept drift (changes in the relationship between inputs and the target) must be tracked.

- Performance metrics are recalculated regularly to catch degradation.

- Alerts may be set for unexpected outputs, errors, or performance drops.

- Logs and dashboards help data scientists stay informed about system health.

- Models may need retraining periodically with fresh data.

- Failing to monitor can lead to poor decisions or broken systems.

- Monitoring also ensures fairness, transparency, and compliance over time.

- Feedback loops help improve the model based on user behavior.

- Maintenance is not optional—it’s an essential part of responsible AI.
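One common way to put a number on data drift is the Population Stability Index (PSI), which compares how a feature's values are spread across bins in production versus in the training data: PSI is the sum over bins of (actual share - expected share) * ln(actual share / expected share). The sketch below is one possible implementation on synthetic data; the bin edges, the small epsilon for empty bins, and the 0.1 / 0.25 thresholds are illustrative assumptions (the thresholds are a widely used rule of thumb, not a standard).

```python
import math
import random


def bin_fractions(values, edges):
    """Share of values in each bin defined by sorted edges (open-ended at both ends)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        i = sum(v >= e for e in edges)  # number of edges at or below v = bin index
        counts[i] += 1
    return [c / len(values) for c in counts]


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a reference sample and a new sample."""
    e_frac = bin_fractions(expected, edges)
    a_frac = bin_fractions(actual, edges)
    total = 0.0
    for e, a in zip(e_frac, a_frac):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


def drift_status(score, warn=0.1, alert=0.25):
    """Rule-of-thumb interpretation; thresholds are illustrative and should be tuned."""
    if score >= alert:
        return "significant drift"
    if score >= warn:
        return "moderate drift"
    return "stable"


rng = random.Random(0)
training = [rng.gauss(50, 10) for _ in range(5000)]
same = [rng.gauss(50, 10) for _ in range(5000)]
shifted = [rng.gauss(60, 12) for _ in range(5000)]
edges = [30, 40, 45, 50, 55, 60, 70]  # hypothetical bin edges for this feature

for name, sample in [("same distribution", same), ("shifted", shifted)]:
    score = psi(training, sample, edges)
    print(f"{name}: PSI={score:.3f} -> {drift_status(score)}")
```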

10. Communication of Results

- Throughout the project, and especially at the end, findings must be communicated clearly.

- This includes visualizing results, sharing insights, and explaining model behavior.

- Dashboards, slide decks, executive summaries, and reports are common formats.

- Technical and non-technical audiences both need to understand the implications.

- Data storytelling bridges the gap between model complexity and actionable insight.

- Visualizations make abstract patterns tangible and easier to digest.

- Communicating uncertainty and limitations is as important as reporting success.

- Stakeholder engagement ensures the solution is trusted and adopted.

- Good communication helps align data science outcomes with business strategy.

- Ultimately, it transforms technical results into decision-making tools.
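Communicating uncertainty can be as simple as reporting an interval instead of a single number. The sketch below uses a percentile bootstrap, one common technique chosen here for illustration, to put a 95% interval around a model's accuracy on a hypothetical set of 200 test predictions (150 correct); the data, seed, and resample count are assumptions.

```python
import random


def bootstrap_ci(outcomes, n_resamples=2000, confidence=0.95, seed=0):
    """Percentile bootstrap interval for the mean of 0/1 outcomes (e.g., accuracy)."""
    rng = random.Random(seed)
    n = len(outcomes)
    # Resample with replacement and record the mean of each resample.
    means = sorted(sum(rng.choices(outcomes, k=n)) / n for _ in range(n_resamples))
    alpha = (1 - confidence) / 2
    lower = means[int(alpha * n_resamples)]
    upper = means[int((1 - alpha) * n_resamples) - 1]
    return lower, upper


# Hypothetical test-set results: 1 = correct prediction, 0 = incorrect.
correct = [1] * 150 + [0] * 50
point = sum(correct) / len(correct)
low, high = bootstrap_ci(correct)
print(f"Accuracy: {point:.1%} (95% CI {low:.1%} to {high:.1%}, n={len(correct)})")
```

A sentence such as "accuracy is about 75%, plausibly between the two interval bounds" is often easier for non-technical audiences to act on than a bare point estimate.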

Challenges in Data Science

Building data infrastructure, acquiring tools, hiring talent, and maintaining pipelines can be expensive, especially for smaller organizations.

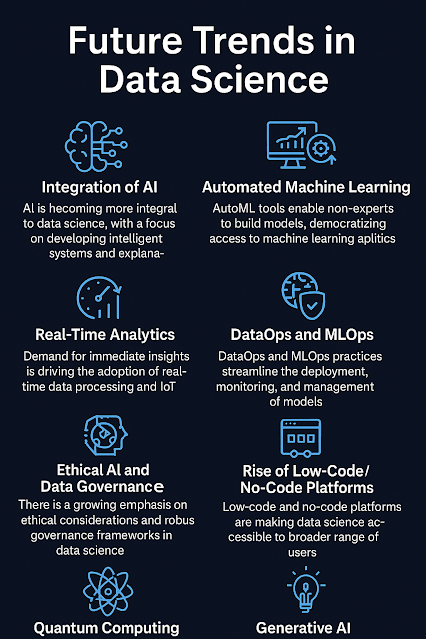

Future Trends in Data Science

The future of data science is poised for remarkable evolution as technology, data, and societal needs grow more complex. One of the biggest trends is the integration of artificial intelligence (AI) into every aspect of data science. With more accessible deep learning libraries and powerful hardware, data scientists are building more intelligent systems that not only predict outcomes but also explain them — giving rise to Explainable AI (XAI), which improves transparency and trust.

How to Become a Data Scientist

1. Understand What a Data Scientist Does

Before starting, know what the role involves: a mix of statistics, programming, domain knowledge, and communication.

Data scientists clean and analyze data, build predictive models, visualize insights, and help businesses make data-driven decisions.

2. Build a Strong Educational Foundation

- Recommended Degrees: Computer Science, Statistics, Mathematics, Data Science, or Engineering.

- Self-Taught Path: Many people succeed through online platforms (Coursera, edX, Udemy, YouTube) and open resources.

Key concepts to learn early: probability, statistics, linear algebra, and calculus.

3. Learn Key Programming Languages

- Python is the most widely used language in data science.

- R is also powerful for statistical analysis.

- Learn SQL for querying data from databases.

Familiarity with tools like Jupyter Notebooks, Git, and Excel is also helpful.
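Because SQL and Python are so often used together, a useful first exercise is running SQL from Python. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `orders` table and its rows are hypothetical.

```python
import sqlite3

# In-memory database: nothing is written to disk.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",  # placeholders avoid SQL injection
    [("alice", 120.0), ("bob", 35.5), ("alice", 80.0), ("carol", 210.0), ("bob", 64.5)],
)

# Order count and total spend per customer, largest total first.
query = """
    SELECT customer, COUNT(*) AS n_orders, SUM(amount) AS total
    FROM orders
    GROUP BY customer
    ORDER BY total DESC
"""
rows = conn.execute(query).fetchall()
for customer, n_orders, total in rows:
    print(f"{customer:<6} {n_orders} orders  {total:8.2f}")
conn.close()
```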

4. Master Data Handling and Analysis

- Learn how to collect, clean, and manipulate data using libraries like Pandas and NumPy.

- Understand how to perform Exploratory Data Analysis (EDA) to find patterns and trends.

- Practice building visualizations using Matplotlib, Seaborn, or Plotly.

5. Study Machine Learning

- Learn supervised and unsupervised learning algorithms like linear regression, decision trees, clustering, and SVMs.

- Progress to deep learning with TensorFlow or PyTorch when you're comfortable.

- Understand model evaluation, cross-validation, and overfitting.

6. Work on Real Projects

- Apply your knowledge to real-world datasets (e.g., Kaggle, UCI Machine Learning Repository).

- Build projects like customer segmentation, churn prediction, or stock price forecasting.

- Publish your work on GitHub and write blogs or reports on Medium or LinkedIn.

7. Develop Domain Knowledge

Specializing in areas like finance, healthcare, marketing, or sports can make your skills more valuable.

Understanding the business context improves model relevance and impact.

8. Learn Data Science Tools

Familiarize yourself with tools used in real-world data science:

- Tableau / Power BI for dashboards

- Apache Spark for big data

- Docker, MLflow, Airflow for deployment and workflows

- Google Colab / Jupyter for notebooks

9. Build a Portfolio and Resume

- Showcase your projects, GitHub code, and any certifications.

- Highlight problem-solving skills, creativity, and impact.

- Customize your resume for each job and use clear, result-focused language.

10. Apply, Network, and Keep Learning

- Apply for internships, junior roles, or freelance gigs.

- Join data science communities (like Kaggle, Reddit, or local meetups).

- Stay updated with blogs, YouTube channels, and new tools.

Data science evolves fast — lifelong learning is essential.

How to Start a Career in Data Science

Whether you're a student, recent graduate, or someone changing careers, here’s a clear roadmap to break into data science — even without prior experience.

1. Learn the Basics of Data Science

Start by understanding what data science is all about: combining math, statistics, programming, and domain knowledge to extract insights from data.

Read blogs, watch YouTube videos, or take free intro courses on platforms like Coursera or edX to get a feel for the field.

Grasp core concepts like data types, probability, hypothesis testing, and basic visualizations.

2. Build Foundational Skills

Master the essential tools used in data science:

- Programming: Start with Python (easier for beginners) and SQL for databases.

- Statistics & Math: Understand concepts like distributions, correlations, regression, and matrix operations.

- Data Analysis: Learn libraries like Pandas, NumPy, and Matplotlib.

- Version Control: Get familiar with Git and GitHub.

3. Take Online Courses or Bootcamps

Enroll in beginner-to-intermediate courses that cover end-to-end data science workflows.

Great platforms include:

- Coursera (IBM Data Science, Google Data Analytics)

- Udemy, DataCamp, edX, and Kaggle Learn

Bootcamps are also a good option for hands-on, fast-paced learning.

4. Work on Real Projects

Apply your learning on real datasets from:

- Kaggle Datasets, UCI Machine Learning Repository, or open government data portals

Create projects like:

- Predicting housing prices

- Customer segmentation

- Sentiment analysis

Share your code and results on GitHub.

5. Build a Portfolio

Your portfolio is your digital resume.

Include 3–5 well-documented projects that show end-to-end thinking: from problem definition to model deployment.

Add visualizations, writeups, and insights — not just code.

Consider writing blog posts to explain your work clearly.

6. Earn Certifications (Optional but Helpful)

Certifications validate your skills and make your resume stand out. Examples:

- IBM Data Science Certificate

- Google Data Analytics Certificate

- AWS Certified Machine Learning

These can help build credibility, especially if you lack formal education in the field.

7. Network and Engage with the Community

Join online communities like:

- Kaggle

- Reddit r/datascience

- LinkedIn groups or local meetups

Participate in competitions, hackathons, and open-source projects to gain exposure and mentorship.

8. Prepare for Job Applications

Craft a tailored resume with:

- Skills and tools you know

- Projects with clear outcomes

- Any certifications or coursework

Prepare for technical interviews by practicing:

- SQL queries

- Probability & statistics questions

- Python problems

- Machine learning concepts and case studies

9. Apply for Internships or Entry-Level Roles

Look for roles like:

- Data Analyst

- Business Intelligence Analyst

- Junior Data Scientist

Even if the title isn’t “Data Scientist,” the experience will build your foundation and resume.

10. Keep Learning & Stay Updated

Data Science is always evolving — new tools, trends, and models emerge regularly.

Subscribe to newsletters (e.g., Towards Data Science), follow leaders on LinkedIn, and continuously expand your skillset (e.g., deep learning, MLOps, NLP).

Common Data Science Roles & Responsibilities

Understanding the diverse roles in data science can help aspiring professionals find their path. Below is a structured overview of common roles, their responsibilities, and the primary tools they use:

| Role | Responsibilities | Key Tools |
|---|---|---|
| Data Analyst | Analyze trends, create visualizations, and generate reports and dashboards | Excel, SQL, Tableau, Python |
| Data Scientist | Develop models, extract insights, and solve complex business problems | Python, R, scikit-learn, Jupyter, Pandas |
| Machine Learning Engineer | Build, train, deploy, and scale ML models in production systems | TensorFlow, PyTorch, Docker, Kubernetes |
| Data Engineer | Design and maintain data pipelines and data infrastructure | Spark, Hadoop, Airflow, SQL, Scala |
| Business Intelligence Analyst | Transform data into actionable insights through dashboards and reports | Power BI, Tableau, Looker |
| MLOps Engineer | Automate and manage the ML lifecycle, including deployment and monitoring | MLflow, Kubeflow, AWS SageMaker, Azure ML |

Data Scientist Salaries Across 20 Leading Countries

| Rank | Country | Average Salary (USD) |
|---|---|---|
| 1 | USA | $120,776–$156,790 |
| 2 | Switzerland | $143,360–$193,358 |
| 3 | Australia | $107,446–$125,500+ |
| 4 | Denmark | $175,905 (PPP-adjusted) |
| 5 | Luxembourg | $150,342 |
| 6 | Canada | $80,311–$101,527 |
| 7 | Germany | $81,012–$109,447 |
| 8 | Netherlands | $89,000–$98,471 |
| 9 | Belgium | $125,866 |
| 10 | UK | $76,438–$85,582 |
| 11 | Singapore | $82,197–$88,000+ |
| 12 | UAE | $86,704 |
| 13 | New Zealand | $86,082 |
| 14 | France | $76,900–$97,883 |
| 15 | Israel | $88,000–$94,706 |
| 16 | Japan | $54,105–$70,000 |
| 17 | China | $46,668 |
| 18 | Brazil | $50,832 |
| 19 | South Africa | $35,419–$44,436 |
| 20 | India | $16,759–$21,640 |
